In the rapidly evolving world of digital marketing, Search Engine Optimization (SEO) plays a vital role in ensuring that your website gets the visibility it deserves. One of the most crucial components of SEO is understanding how search engines like Google crawl, index, and rank websites. This is where Googlebot comes into play. But what exactly is Googlebot, and why is it so important for your website’s SEO?

In this post, we’ll dive deep into what Googlebot is, how it functions, and the role it plays in your website’s SEO performance. Let’s break it down!

What is Googlebot?

Googlebot is Google’s web crawler, or spider: a program that systematically browses the web and collects page data so it can be stored in Google’s search index. This allows Google to quickly provide relevant search results when users enter queries. Googlebot visits websites and scans their content, and Google then evaluates each page’s relevance based on a variety of factors, such as keywords, content quality, and website structure.

Without Googlebot, Google wouldn’t be able to provide search results. The bot is responsible for discovering pages across the internet, from the most prominent to the most obscure, so they can be added to Google’s search index.

What is a Web Crawler? How Google’s Crawling & Indexing Works

A web crawler, like Googlebot, is an automated tool used by search engines to navigate the web. Crawlers visit different websites, extract data from web pages, and index it for future use. The process can be broken down into two key stages:

- Crawling – This is when Googlebot visits a website to discover new pages or updates to existing pages. The bot follows the links it finds on a webpage, essentially creating a roadmap of the web.

- Indexing – After a page is crawled, Google processes its content and stores it in its search index. This indexed information is then used to match users’ search queries with relevant web pages.

This entire process is fundamental to SEO because only websites that are properly crawled and indexed by Google can appear in search results. If a website isn’t crawled, it will never appear in Google’s index and, therefore, won’t show up in search results.
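The two stages above can be illustrated with a toy example. This is only a minimal sketch using Python's standard library, not how Googlebot is actually implemented: `extract_links` stands in for the crawling stage (finding links to follow), and `build_index` stands in for the indexing stage (a simple word-to-URL lookup). The function names and the `example.com` URLs are assumptions made for illustration.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkExtractor(HTMLParser):
    """Collects absolute URLs from <a href="..."> tags on one page."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    # Resolve relative links against the page's own URL.
                    self.links.append(urljoin(self.base_url, value))


def extract_links(base_url, html):
    """Crawling stage (toy version): find the links a crawler could follow."""
    parser = LinkExtractor(base_url)
    parser.feed(html)
    return parser.links


def build_index(pages):
    """Indexing stage (toy version): map each lowercase word to the URLs containing it."""
    index = {}
    for url, text in pages.items():
        for word in text.lower().split():
            index.setdefault(word, set()).add(url)
    return index


html = '<a href="/about">About</a> <a href="post-1">First post</a>'
print(extract_links("https://example.com/blog/", html))

index = build_index({
    "https://example.com/a": "Fresh coffee beans",
    "https://example.com/b": "Coffee grinder reviews",
})
print(sorted(index["coffee"]))
```

A page that never appears in the output of the crawling step never reaches the indexing step, which is the point the paragraph above makes about visibility in search results.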

Different Robots and Crawlers

While Googlebot is the most well-known web crawler, it is not the only one out there. There are several other crawlers used by different search engines, such as Bing and Yahoo. Here are a few notable examples:

- Bingbot: Used by Microsoft’s Bing search engine to crawl and index web pages.

- Slurp: Yahoo’s web crawler that discovers and indexes new content for Yahoo Search.

- Baiduspider: A web crawler used by the Chinese search engine Baidu.

Googlebot, however, matters most for the majority of websites, because Google handles the largest share of web searches. Understanding how Googlebot works can give you a distinct advantage in crafting your SEO strategies.
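Each of the crawlers listed above announces itself with a recognizable token in the User-Agent header of its requests, so you can spot them in your server logs. The snippet below is a rough sketch of that idea; the `identify_crawler` helper and the `CRAWLER_TOKENS` table are hypothetical names introduced for this example. Keep in mind that a User-Agent string can be spoofed, so a match only tells you what a visitor claims to be.

```python
# Substrings these crawlers include in their User-Agent headers (compared in lowercase).
CRAWLER_TOKENS = {
    "Googlebot": "googlebot",
    "Bingbot": "bingbot",
    "Slurp": "slurp",
    "Baiduspider": "baiduspider",
}


def identify_crawler(user_agent):
    """Return the crawler name claimed by a User-Agent string, or None."""
    ua = user_agent.lower()
    for name, token in CRAWLER_TOKENS.items():
        if token in ua:
            return name
    return None


googlebot_ua = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
browser_ua = "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 Chrome/120.0 Safari/537.36"

print(identify_crawler(googlebot_ua))
print(identify_crawler(browser_ua))
```

For anything security-sensitive, a User-Agent check like this should be combined with a reverse DNS lookup on the requesting IP address, which is the verification method Google recommends.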

Why Googlebot Is Important for Your SEO

Googlebot plays a central role in SEO. If Googlebot doesn’t crawl and index your website correctly, it won’t show up in search engine results, no matter how well-optimized your content is. This makes Googlebot integral to your SEO strategy. Here’s why:

- Improved Crawl Efficiency – Googlebot’s efficiency in crawling your website directly affects how quickly new content is indexed. A fast and efficient crawl can result in your website getting updated on Google’s index more quickly.

- Proper Indexing – If Googlebot encounters issues while crawling your site, it may not index all your pages. This means your site will miss out on valuable search rankings. Ensuring that Googlebot indexes your site properly is key to maintaining good SEO health.

- Ranking Signals – Googlebot collects data related to several ranking signals, such as the quality of your content, page speed, mobile optimization, and user experience. These factors influence where your site ranks on Google’s SERPs.

How Googlebot Visits Your Site

Googlebot uses a methodical process to visit websites. It follows links from one page to another, collecting data along the way. Here’s a closer look at how Googlebot visits your website:

- Starting Points: Googlebot begins by visiting the most important or well-known pages of a site. These could be the homepage or any pages that are frequently linked to from other sites.

- Following Links: Once Googlebot visits a page, it follows the internal and external links on that page, discovering additional pages.

- Crawling Depth: Googlebot doesn’t crawl every link on a website. It prioritizes pages that are more relevant or have more backlinks. If your page has fewer or no internal links pointing to it, Googlebot might miss it.

- Frequency of Crawling: Googlebot revisits websites periodically, and how often it returns depends on how frequently your content is updated and how authoritative your website is. Popular, frequently updated websites tend to be crawled more often.

By understanding how Googlebot navigates your site, you can ensure your website is optimized for easy crawling and efficient indexing.
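To see why internal links matter, the link-following behavior described above can be simulated on a small, made-up site. This is a simplified sketch, not Googlebot's actual algorithm: it does a breadth-first walk from the homepage and records how many clicks deep each page is. The `SITE` map, its paths, and the `crawl` function are all hypothetical.

```python
from collections import deque


def crawl(site_links, start, max_depth):
    """Breadth-first walk; returns {url: depth} for pages reached within max_depth."""
    depths = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        if depths[page] >= max_depth:
            continue  # do not follow links beyond the depth limit
        for target in site_links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


# Hypothetical site: each page maps to the internal links it contains.
SITE = {
    "/": ["/blog", "/about"],
    "/blog": ["/blog/post-1"],
    "/blog/post-1": ["/"],
    "/about": [],
    "/orphan": ["/"],  # links out, but nothing links to it
}

reached = crawl(SITE, "/", max_depth=3)
orphans = sorted(set(SITE) - set(reached))

print(reached)
print(orphans)
```

In this example `/orphan` is never reached because no other page links to it, which mirrors the situation where a page without internal links gets missed. Running a check like this against your own link structure is one way to find pages that need a link from somewhere else on the site.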

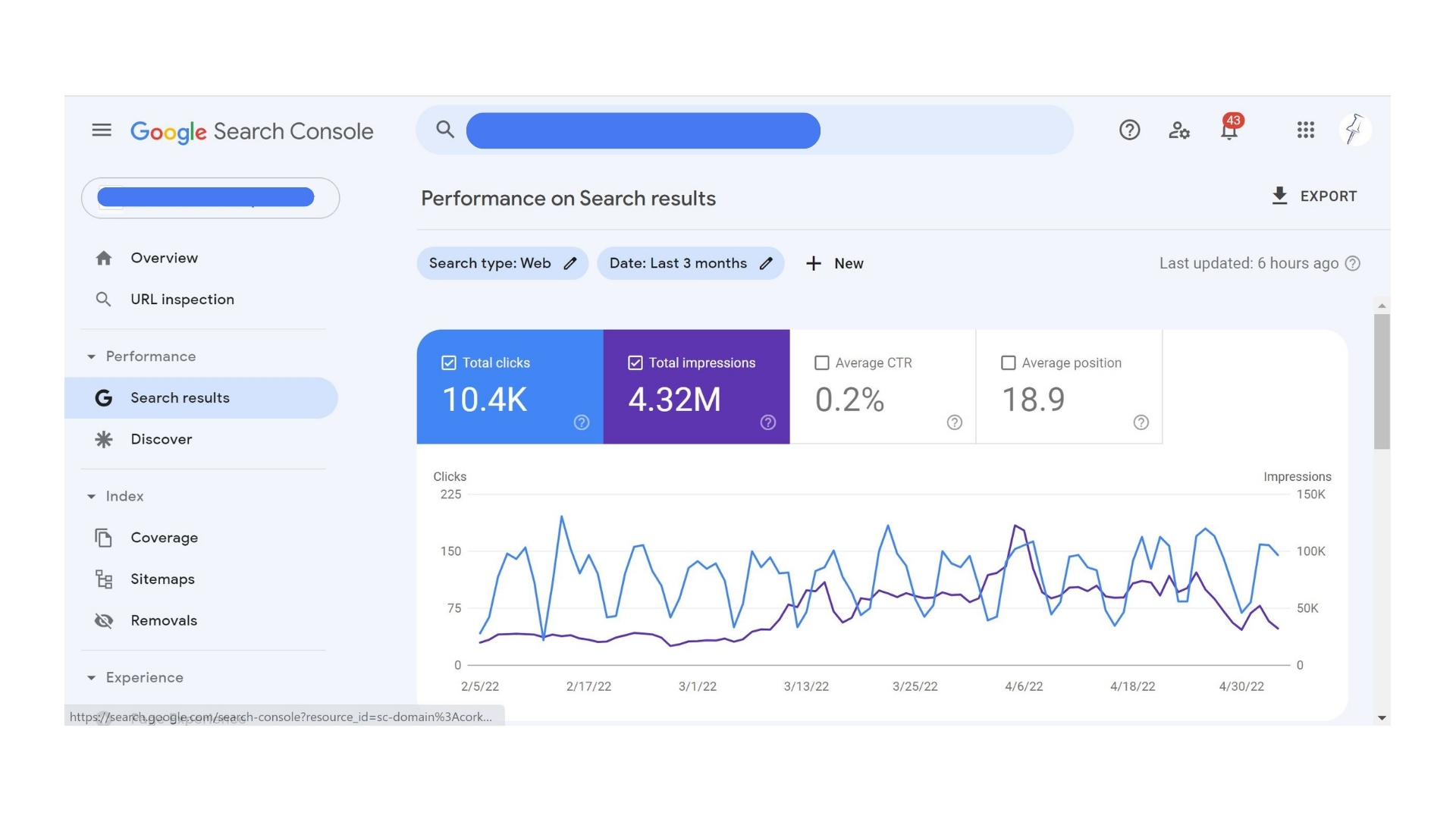

Google Search Console

One of the best tools for monitoring and enhancing your website’s interaction with Googlebot is Google Search Console. This free tool provides essential insights into how Googlebot is crawling your site. With Google Search Console, you can:

- Monitor Crawl Errors: If Googlebot encounters any problems while crawling your site (e.g., broken links, missing pages), Search Console will alert you.

- Submit Sitemaps: You can submit a sitemap of your website to help Googlebot crawl and index all your pages more efficiently.

- Analyze Performance: You can track which pages are being indexed and how they’re performing in search results, allowing you to adjust your SEO strategies accordingly.

Having a solid understanding of Googlebot’s behavior and using Google Search Console to monitor it can help you optimize your website and improve its ranking on Google.
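If your site doesn't already produce a sitemap, generating one is straightforward. The sketch below builds a small XML sitemap with Python's standard library, using the namespace defined by the sitemaps.org protocol. The `build_sitemap` function, the URLs, and the dates are placeholders chosen for illustration; most CMS platforms and SEO plugins can generate this file for you.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(entries):
    """Build sitemap XML from (url, last_modified_date) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in entries:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod  # e.g. YYYY-MM-DD
    return ET.tostring(urlset, encoding="unicode")


# Placeholder pages and dates.
sitemap_xml = build_sitemap([
    ("https://example.com/", "2024-01-15"),
    ("https://example.com/blog/post-1", "2024-01-10"),
])
print(sitemap_xml)
```

Once the output is saved as a file on your site (commonly `sitemap.xml` at the site root), you can submit its URL in the Sitemaps section of Search Console.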

Optimization for Googlebot

To make sure Googlebot efficiently crawls and indexes your site, you need to implement various optimizations. Here are some tips to ensure your website is fully optimized for Googlebot:

- Improve Site Speed: When your server responds quickly, Googlebot can crawl more of your pages in the same amount of time. Compress images, minimize code, and use caching to improve your site’s speed.

- Mobile Optimization: Google uses mobile-first indexing, meaning it primarily uses the mobile version of your website for indexing and ranking. Ensure that your website is mobile-friendly and optimized.

- Use a Clear URL Structure: Googlebot favors websites with a clear, logical URL structure. Avoid complex URLs with unnecessary parameters, and make sure your site’s pages are easy to navigate.

- Create Quality Content: Googlebot evaluates the quality of your content. Make sure your pages are informative, relevant, and contain the right keywords.

- Avoid Blocking Googlebot: In some cases, website owners may mistakenly block Googlebot using a robots.txt file or other tools. Double-check to ensure that important pages are not being blocked.

By following these best practices, you’ll help Googlebot crawl and index your pages effectively, which will ultimately improve your website’s SEO.
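One of the tips above, checking that robots.txt isn't blocking Googlebot by mistake, is easy to test yourself. Here is a minimal sketch using Python's built-in `urllib.robotparser` module. The robots.txt rules and URLs are invented for the example, and `googlebot_allowed` is a hypothetical helper name. Python's parser approximates how crawlers read these rules, so treat the result as a quick sanity check and confirm anything important in Search Console.

```python
from urllib.robotparser import RobotFileParser

# Example rules; replace with the contents of your own robots.txt.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/

User-agent: Googlebot
Disallow: /drafts/
"""


def googlebot_allowed(robots_txt, url):
    """Return True if the given rules let Googlebot fetch the URL."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch("Googlebot", url)


for url in (
    "https://example.com/blog/post-1",
    "https://example.com/drafts/new-post",
):
    status = "allowed" if googlebot_allowed(ROBOTS_TXT, url) else "blocked"
    print(url, "->", status)
```

If a page you want in search results comes back as blocked, look for a `Disallow` rule in your robots.txt that matches its path.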

Conclusion

The success of your website’s SEO depends on understanding how Googlebot works. Googlebot is the key to ensuring that your content gets discovered, crawled, and indexed, which is essential for ranking in Google’s search results. By optimizing your website for Googlebot and using tools like Google Search Console, you can enhance your SEO strategies and improve your website’s visibility on the web.

Ultimately, the better you understand how Googlebot works, the better you can optimize your site to rank higher in search results and reach a wider audience.