Introduction to robots.txt

The internet is vast, with countless websites and pages. Search engines such as Google use bots (also known as crawlers or spiders) to navigate this landscape, discovering and indexing content for users. However, not every part of a website is meant to be crawled. This is where the robots.txt file comes in: this small but essential file lets website owners tell search engine bots which parts of their site should or should not be crawled.

What exactly is a robots.txt file used for?

robots.txt is a plain text file placed in a website’s root directory. It gives search engine crawlers instructions about which areas of the site they may access and which are off-limits. You can use it to:

- Prevent crawlers from accessing sensitive or irrelevant sections of a website (e.g., admin pages or private directories).

- Manage crawl budget by keeping bots away from low-value URLs, so they spend more of their time on your most important content.

- Avoid duplicate content issues by restricting access to certain versions of a page.

Understand the limitations of a robots.txt file

While a robots.txt file is a useful tool, it’s important to recognize its limitations:

- Not a Security Measure: Robots.txt only guides well-behaved crawlers. Malicious bots can ignore it and still access restricted areas.

- Non-Binding Instructions: Not every crawler supports every directive, and different crawlers may interpret the same syntax differently, so a rule one search engine honors may be ignored by another.

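- No Guarantee of Privacy: A URL blocked by robots.txt can still appear in search results, usually without a description, if other pages link to it. To keep a page out of search results, use a noindex rule on a page crawlers are allowed to fetch, or protect the page with a password.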

How to create and submit a robots.txt file

A well-crafted robots.txt file is key to controlling how search engines crawl your website. Here’s a step-by-step guide to creating and submitting one.

Basic guidelines for creating a robots.txt file

- Use a plain text editor, such as Notepad or TextEdit, and save the file as UTF-8 plain text (not rich text).

- Ensure the file is named exactly robots.txt (case-sensitive).

- Place the file in the root directory of your website (e.g., https://www.example.com/robots.txt); crawlers won’t look for it in a subdirectory.

- Follow the Robots Exclusion Protocol for proper syntax.
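Crawlers request robots.txt only from the root of a host, and the rules in it apply only to the protocol, host, and port where the file is served (so shop.example.com needs its own file). As a minimal sketch, the Python function below derives the robots.txt location that governs any page URL using only the standard library; the function name robots_txt_url is hypothetical, chosen for illustration.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_txt_url(page_url: str) -> str:
    """Return the robots.txt URL that governs page_url.

    The scheme and network location (host and optional port) are kept;
    the path, query string and fragment are replaced by /robots.txt.
    """
    parts = urlsplit(page_url)
    if not parts.scheme or not parts.netloc:
        raise ValueError(f"expected an absolute URL, got {page_url!r}")
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


if __name__ == "__main__":
    # A page deep inside the site is governed by the file at the root.
    print(robots_txt_url("https://www.example.com/blog/2024/post.html?ref=home"))
    # A different subdomain is a different host and needs its own file.
    print(robots_txt_url("https://shop.example.com/cart"))
```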

Create a robots.txt file

Start by drafting the file with basic directives. Here is what a basic robots.txt file might look like:

```
User-agent: *
Disallow: /private/
Allow: /
```

In this example:

- User-agent: * applies to all bots.

- Disallow: /private/ blocks access to the /private/ directory.

- Allow: / permits access to all other parts of the site. (This line is optional, since anything not disallowed is allowed by default.)
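You can sanity-check how a standards-following parser reads these rules before uploading anything. The sketch below feeds the basic example to Python’s standard-library urllib.robotparser module; the crawler name ExampleBot and the test paths are illustrative assumptions. This parser applies the first matching rule in a group, which gives the same answer Google would for this particular file.

```python
from urllib.robotparser import RobotFileParser

# The basic example, with the group's lines kept together.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Allow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# "ExampleBot" is a hypothetical crawler name; the * group applies to it.
for path in ["/", "/blog/post.html", "/private/", "/private/report.pdf"]:
    url = "https://www.example.com" + path
    verdict = "allowed" if parser.can_fetch("ExampleBot", url) else "blocked"
    print(f"{path:22} {verdict}")
```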

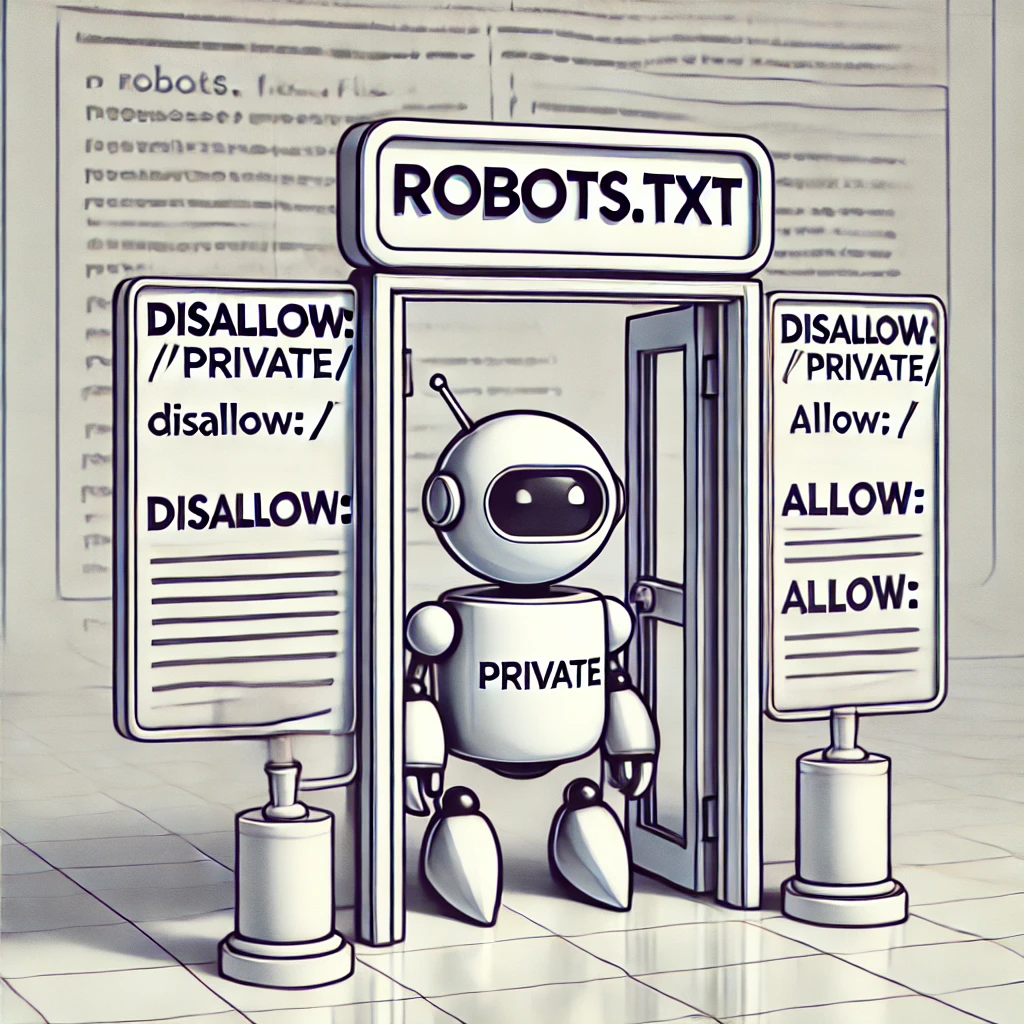

How to write robots.txt rules

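- User-agent: Specifies which crawler the rules that follow apply to (* matches any crawler).

- Disallow: Prevents bots from accessing the specified paths.

- Allow: Grants access to specific paths, typically to carve an exception out of a broader Disallow rule.

- Sitemap: Gives the full URL of your XML sitemap. It is not tied to any User-agent group.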

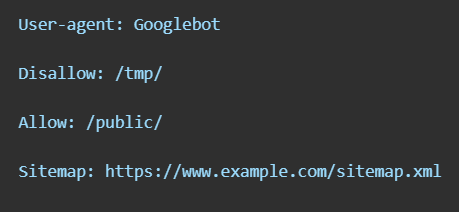

Example:

```
User-agent: Googlebot
Disallow: /tmp/
Allow: /public/

Sitemap: https://www.example.com/sitemap.xml
```
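To see how each rule type is read, the sketch below parses this example with Python’s urllib.robotparser (site_maps() requires Python 3.8 or later). OtherBot is a made-up crawler name standing in for any bot without its own group.

```python
from urllib.robotparser import RobotFileParser

ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /tmp/
Allow: /public/

Sitemap: https://www.example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# The Sitemap line is collected independently of any User-agent group.
print(parser.site_maps())

# Googlebot is bound by its group...
print(parser.can_fetch("Googlebot", "https://www.example.com/tmp/cache.html"))
print(parser.can_fetch("Googlebot", "https://www.example.com/public/index.html"))
# ...while a crawler with no matching group (and no * group) is unrestricted.
print(parser.can_fetch("OtherBot", "https://www.example.com/tmp/cache.html"))
```

Because nothing outside /tmp/ is disallowed, the Allow: /public/ line changes nothing in this file; Allow matters only when it makes an exception to a broader Disallow rule.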

Upload the robots.txt file

To make your robots.txt file active:

- Access your website’s root directory using FTP or your hosting provider’s file manager.

- Upload the robots.txt file to the root directory.

Test robots.txt markup

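Before relying on the file, verify its syntax and behavior. In Google Search Console, the robots.txt report (which replaced the older robots.txt Tester) shows the robots.txt files Google has fetched along with any errors or warnings, and the URL Inspection tool shows whether a specific URL is blocked. This step helps you catch errors that could unintentionally block important pages.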
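Alongside Google’s tools, you can catch accidental blocks locally before uploading a change. The sketch below is a hypothetical helper, find_blocked, that parses a draft robots.txt with Python’s urllib.robotparser and reports which must-crawl URLs a given crawler would be refused. The draft and URLs are invented for illustration. This parser applies the first matching rule and does not understand Google’s * and $ path wildcards, so treat it as a quick check rather than a substitute for Google’s own report.

```python
from urllib.robotparser import RobotFileParser


def find_blocked(robots_txt: str, user_agent: str, urls: list[str]) -> list[str]:
    """Return the URLs in `urls` that `user_agent` may not fetch."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


# A draft with a mistake: "/blog" was meant to be "/blog-drafts/".
DRAFT = """\
User-agent: *
Disallow: /admin/
Disallow: /blog
"""

# Pages that must stay crawlable (illustrative URLs).
MUST_CRAWL = [
    "https://www.example.com/",
    "https://www.example.com/blog/",
    "https://www.example.com/blog/robots-txt-guide.html",
    "https://www.example.com/products/",
]

blocked = find_blocked(DRAFT, "Googlebot", MUST_CRAWL)
if blocked:
    print("Draft blocks important pages:")
    for url in blocked:
        print("  ", url)
else:
    print("All important pages are crawlable.")
```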

Submit robots.txt file to Google

After testing:

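- Go to Google Search Console.

- Open Settings and, under Crawling, open the robots.txt report (this report replaced the older “robots.txt Tester”).

- If you’ve changed the file, use the “Request a recrawl” option to ask Google to fetch the new version.

Google finds robots.txt automatically at the root of your site, so this step only speeds up how quickly Google picks up changes.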

Useful robots.txt rules

Here are some commonly used directives:

Block all bots from accessing a specific directory:

```
User-agent: *
Disallow: /example-directory/
```

Allow Googlebot but block other bots:

```
User-agent: Googlebot
Allow: /

User-agent: *
Disallow: /
```
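A crawler follows only the group that matches it; the * group is a fallback for crawlers that have no group of their own. Running the second example through Python’s urllib.robotparser shows the effect; Bingbot and ExampleBot are simply sample user-agent names.

```python
from urllib.robotparser import RobotFileParser

ROBOTS_TXT = """\
User-agent: Googlebot
Allow: /

User-agent: *
Disallow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

url = "https://www.example.com/products/widget.html"
for agent in ["Googlebot", "Bingbot", "ExampleBot"]:
    # Googlebot matches its own group; the others fall back to the * group.
    print(agent, parser.can_fetch(agent, url))
```

Because Googlebot has its own group, it ignores the * group entirely, while every other crawler falls back to the * group and is blocked.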

Update your robots.txt file

As your website grows, you may need to update your robots.txt file. Follow these steps:

Download your robots.txt file

Get the latest version of your robots.txt file by:

- Accessing it directly via your website URL (e.g., www.example.com/robots.txt).

- Using an FTP client or file manager to download it.

Edit your robots.txt file

Open the file in a plain text editor and make the necessary changes. Check that the syntax is correct and that the rules reflect your updated requirements.
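One syntax detail worth checking while editing: keep each group’s User-agent line and its Allow/Disallow lines together, and use blank lines only between groups. Google’s parser tolerates stray blank lines, but the original Robots Exclusion Protocol used blank lines to separate records, and some parsers still end a record at a blank line. Python’s urllib.robotparser is one of them, as this sketch shows; the URL and crawler name are illustrative.

```python
from urllib.robotparser import RobotFileParser

URL = "https://www.example.com/private/report.pdf"

# Rules with a blank line between User-agent and Disallow.
SPACED = "User-agent: *\n\nDisallow: /private/\n"
# The same rules with the group's lines kept together.
COMPACT = "User-agent: *\nDisallow: /private/\n"


def allowed(robots_txt: str) -> bool:
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch("ExampleBot", URL)


# urllib.robotparser discards the pending User-agent line at the blank line,
# so the Disallow rule is never attached to a group and the URL is allowed.
print("spaced: ", allowed(SPACED))
print("compact:", allowed(COMPACT))
```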

Upload your robots.txt file

Replace the old file with the updated version in your website’s root directory.

Refresh Google’s robots.txt cache

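Google generally caches your robots.txt file for up to 24 hours. To refresh it sooner:

- Open the robots.txt report in Search Console (the replacement for the older robots.txt Tester).

- Use “Request a recrawl” to ask Google to fetch the updated file.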

How does Google interpret the robots.txt specification?

Google follows the Robots Exclusion Protocol (standardized as RFC 9309) and documents how it handles several details:

- User-Agent Matching: Each Google crawler follows only the one group whose User-agent line most specifically matches it and ignores all other groups; user-agent matching is case-insensitive.

- Crawl-Delay Ignored: Unlike some other bots, Googlebot does not support the Crawl-delay directive.

- Sitemap Support: Google recognizes the Sitemap directive in robots.txt for efficient crawling.

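- Error Handling: If robots.txt returns most 4xx client errors (such as 404 Not Found), Google treats the site as having no crawl restrictions. If the server returns a 5xx error or can’t be reached, Google temporarily stops crawling the site and, if the problem persists, falls back to its last cached copy of the file.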
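Google also documents how it settles conflicts between Allow and Disallow rules in the same group: the rule with the longest matching path wins, and when an Allow and a Disallow rule are equally long, the less restrictive Allow rule is used. Python’s urllib.robotparser instead applies the first matching rule, so the two can disagree. The sketch below models Google’s precedence for plain path prefixes only. It ignores the * and $ wildcards, and the function name google_style_allowed is hypothetical.

```python
from typing import List, Tuple
from urllib.robotparser import RobotFileParser

# Each rule is (directive, path), e.g. ("disallow", "/shop/").
Rule = Tuple[str, str]


def google_style_allowed(rules: List[Rule], path: str) -> bool:
    """Decide whether `path` may be crawled under one group's rules.

    Simplified model of Google's documented precedence:
    - only rules whose path is a prefix of `path` are considered;
    - the longest matching rule path wins;
    - on a tie in length, Allow beats Disallow;
    - if nothing matches, crawling is allowed.
    Wildcards (* and $) are not handled in this sketch.
    """
    best_len = -1
    best_allow = True
    for directive, rule_path in rules:
        if not rule_path:
            # An empty Allow/Disallow value matches nothing.
            continue
        if not path.startswith(rule_path):
            continue
        is_allow = directive.lower() == "allow"
        if len(rule_path) > best_len or (len(rule_path) == best_len and is_allow):
            best_len = len(rule_path)
            best_allow = is_allow
    return best_allow


rules = [("disallow", "/shop/"), ("allow", "/shop/sale/")]
print(google_style_allowed(rules, "/shop/cart"))        # False: only Disallow /shop/ matches
print(google_style_allowed(rules, "/shop/sale/shoes"))  # True: the longer Allow wins

# urllib.robotparser stops at the first matching rule (Disallow /shop/).
parser = RobotFileParser()
parser.parse(["User-agent: *", "Disallow: /shop/", "Allow: /shop/sale/"])
print(parser.can_fetch("ExampleBot", "https://www.example.com/shop/sale/shoes"))  # False
```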

By understanding how Google interprets robots.txt, you can use it to control more precisely how search engines crawl your site.

A well-crafted and regularly updated robots.txt file is a cornerstone of effective SEO management. It helps search engine bots spend their crawl time on your site’s most valuable content, supporting a more efficient crawl strategy.